Blog

-

Thorough theoretical discussion of a preliminary idea with an attempt to place it in context but no experimental results.

-

Experimental results comparing two or more existing ideas, followed by a detailed analysis.

-

Presentation of an existing idea in a different way, including illustrations of how the idea applies to new use cases, code, architectures, etc. Attribution should be as clear as possible.

- program optimization (automatic parallelization, tiling, etc.);

- code generation;

- data/communication management on GPUs, accelerators and distributed systems;

- hardware/high-level synthesis for affine programs;

- static analysis;

- program verification;

- model checking;

- theoretical foundations of the polyhedral model;

- extensions of the polyhedral model;

- scalability and robustness of polyhedral compilation techniques.

- Riccardo Mori: Use Official ISL C++ Bindings for Polly Project proposal

-

Pankaj Kukreja: Collect Polyhedral Benchmarks [Project proposal]

Mentored by: Brian Homerding, Michael Kruse

-

Sahil Yerawar: Compiling Chapel with Polly/LLVM [Project proposal]

Mentors: Michael Ferguson, Siddharth Bhat, Philip Pfaffe

-

Andrei Lascu: Metamorphic Testing for Math Libraries [Project proposal]

Mentors: Tobias Grosser, Sven Verdoolaege

-

Remove support applying

codegento a set inisccThe codegen operation prints an AST generated from a schedule, given either as a schedule tree or a (union) map. As a convenience, applying codegen to a set would result in codegen being applied to the identity mapping on the set. This seems to be causing confusion about the nature of the codegen operation. Drop the magic such that users are aware that a schedule is needed to perform AST generation. They can still explicitly call the “identity” operation on a set if they really want the old behavior.

-

Return statements in summary functions

Return statements are now allowed at the end of a summary function. The accesses that appear in the argument of the return statement are treated like any other read. Return statements in other positions are currently not allowed as that would require special treatment for turning perceived must-writes that may get short-cut by the return into may-writes.

For example, the following input is now accepted.

int select(int n, int a[n], int i) { return a[i]; } void foo(int n, int a[n]) { #pragma scop for (int i = 0; i + 1 < n; ++i) { a[i] = select(n, a, i) + select(n, a, i + 1); } #pragma endscop } -

Return statements in inlined functions

Return statements are now allowed at the end of an inlined function. This enables support for calls to inlined functions in nested expressions. The return statement in the inlined function is simply replaced by a write to a freshly allocated variable.

For example, the following input is now accepted.

inline int select(int n, int a[n], int i) { return a[i]; } void foo(int n, int a[n]) { #pragma scop for (int i = 0; i + 1 < n; ++i) { a[i] = select(n, a, i) + select(n, a, i + 1); } #pragma endscop } -

Improve coalescing

In particular, the following set descriptions are now also coalesced

{ [a, b, c] : (b = -1 + a and 0 < a <= 3 and 9*floor((-4a + 2c)/9) <= -3 - 4a + 2c) or (a = 4 and b = 3 and 9*floor((-16 + 2c)/9) <= -19 + 2c) }; { [x, y] : 2y = -x and x <= 0 or x <= -1 and 2y <= -x - 1 and 2y >= x - 1 }; { [a] : (a <= 0 and 3*floor((a)/3) = a) or (a < 0 and 3*floor((a)/3) < a) }; { [a, b] : a <= 1024 and b >= 0 and ((-31 - a + b <= 32*floor((-1 - a)/32) <= -33 + b and 32*floor((-1 - a)/32) <= -16 + b + 16*floor((-1 - a)/16)) or (2 <= a <= 15 and b < a)) }; { [a] : a > 0 and ((16*floor((a)/16) < a and 32*floor((a)/32) < a) or a <= 15) }; { [a, b, c, d] : (-a + d) mod 64 = 0 and a <= 8 and b <= 1 and 10 - a <= c <= 3 and d >= 5 and 9 - 64b <= d <= 70; [a, b = 1, c, d] : (-a + d) mod 64 = 0 and a <= 8 and c >= 4 and 10 - a <= c <= 5 and 5 <= d <= 73 - c }; [n, m] -> { S_0[i] : (-n + i) mod 3 = 0 and m >= 3 + n and i >= n and 3*floor((2 + n + 2m)/3) <= n + 3m - i; S_0[n] : n <= m <= 2 + n }; { [a, b] : exists (e0: 0 <= a <= 1 and b >= 0 and 2e0 >= -5 + a + 2b and 2e0 >= -1 + a + b and 2e0 <= a + b); [a, b] : exists (e0: 0 <= a <= 1 and 2e0 >= -5 + a + 2b and 2e0 >= -1 - a + b and 2e0 <= -a + b and 2e0 < -a + 2b) }; { [i, j, i - 8j] : 8 <= i <= 63 and -7 + i <= 8j <= i; [i, 0, i] : 0 <= i <= 7 }; { [a, b] : a >= 0 and 0 <= b <= 1 - a; [1, 1] }; { [a, b] : a, b >= 0 and a + 2b <= 2; [1, 1] }; -

Improve parametric integer programming

If a tableau used for parametric integer programming contains a purely parametric row with an indeterminate sign, then this means that a solution only exists in the part of the context where this constraint holds. Such constraints are now transferred to the context first such that they can be used to more accurately determine the possible signs of other rows and to possible avoid further splits.

In particular, calling

isl_set_dim_maxon the set[P, N] -> { [a] : a < N and a >= 0 and N > P and a <= P and N > 0 and P >= 0 }now results in an affine expression defined over a single part of the domain.

-

Try harder to avoid large coefficients in scheduler

For the Pluto-like scheduler, the main cause of large coefficients was loop coalescing and this was already resolved in isl 0.17. For the Feautrier scheduler, which is used as a backup for the Pluto-like scheduler, loop coalescing was also handled, but for this scheduler, loop coalescing is not the only main source of large coefficients. In particular, if the solution to the LP problem constructed by the Feautrier scheduler is not integral, then the numerators with respect to a common denominator are used as schedule coefficients. These numerators can be arbitrarily large even if the rational values themselves are small. The solution is to continue the search until an integral solution is found. Second, the Feautrier scheduler tries to carry as many dependences as possible (as instructed), which tends to result in schedules with coefficients proportional to the number of statements. This second problem is largely resolved by first trying to only carry self-dependences. This second change also removes some spurious shifts.

-

Handle coscheduled must-sources in dependence analysis

The behavior in case of coscheduled must-sources was undefined. Define the behavior to kill all earlier sources, but not any coscheduled may-sources or must-sources. Also, a dependence derived from a must-source that is coscheduled with any other source is never considered a must-dependence.

-

Support explicit kills in dependence analysis

In fact, make kills the primary interface, with must-sources considered to be both may-sources and kills, since the effect of kills is actually easier to explain than that of must-sources.

-

Drop deprecated

isl_int, band forests and additional functions -

Annanay Agarwal: Enable Polyhedral Optimizations in XLA through LLVM/Polly [Project proposal]

Mentored by: Brian Retford, Michael Kruse, Chandan Reddy

-

Nicolas Bonfante: Maximal static expansion for efficient loop parallelization on GPU [Project proposal]

Mentors: Andreas Simbürger, Michael Kruse

-

Malhar Thakkar: ISL Memory Management Using Clang Static Analyzer [Project proposal]

Mentors: Devin Coughlin, Sven Verdoolaege, Alexandre Isoard

-

Sanjay Srivallabh Singapuram Enabling Julia to target GPGPUs using Polly [Project proposal]

Mentors: Tobias Grosser

-

Support hybrid tiling

Basic hybrid tiling can now be enabled using the

--hybridoption. It currently only applies to stencils with a single statement.For example, hybrid tiling can be applied to the input (say

heat-2d.c)#define N 3072 #define T 512 void heat(float A[2][N][N]) { #pragma scop for (long t = 0; t < T; t++) { for (long i = 1; i < N - 1; i++) for (long j = 1; j < N - 1; j++) A[(t+1)%2][i][j] = (1/9.0f) * (A[t%2][i][j] + A[t%2][i-1][j-1] + A[t%2][i-1][j] + A[t%2][i-1][j+1] + A[t%2][i][j-1] + A[t%2][i][j+1] + A[t%2][i+1][j-1] + A[t%2][i+1][j] + A[t%2][i+1][j+1]); } #pragma endscop }by invoking

ppcgas followsppcg --hybrid --sizes="{kernel[i]->tile[2,2,128];kernel[i]->block[1,128]}" \ --isolate-full-tiles --unroll-copy-shared --unroll-gpu-tile heat-2d.c -

Seven LLVM LNT builds:

- Polly Standard

-O3 -mllvm -polly - Polly Autopar

-O3 -mllvm -polly -mllvm -polly-parallel -lgomp - Polly Extended Coverage

-O3 -mllvm -polly -mllvm -polly-process-unprofitable - Polly Performance

-O3 -mllvm -polly - Polly “Before-Vectorizer

-O3 -mllvm -polly -mllvm -polly-position=before-vectorizer - Polly “Before-Vectorizer” Extended Coverage

-O3 -mllvm -polly -mllvm -polly-position=before-vectorizer -mllvm -polly-process-unprofitable - Polly “Before-Vectorizer” Only Scop-Detection

-O3 -mllvm -polly -mllvm -polly-position=before-vectorizer

- Polly Standard

-

Detect and report unbalanced pairs of scop/endscop pragmas

For example, in the following code fragment, the

#pragma scopappears inside thefor-loop, while the#pragma endscopappears outside of the loop. Previous versions would silently ignore such regions. Now, a warning is produced.void foo(int a[10]) { for (int i = 0; i < 10; ++i) #pragma scop a[i] = i; #pragma endscop } -

Take into account assumptions during extraction

Consider the program fragment below.

int f(int k) { int a; #pragma scop __builtin_assume(k >= 0); a = k % 16; #pragma endscop return a; }Without the

__builtin_assume, pet would have to assume thatkmay be either positive or negative, resulting in a piecewise expression fora. The extra assumption allows the expression to be simplified. Before, pet would only perform such simplifications at the very end, now they are also performed during the extraction process, potentially speeding up this extraction process in the presence of multiple modulo or integer division expressions. -

Improve elimination of redundant existentially quantified variables

In particular, finally implement the Omega test in isl. A more powerful (and more expensive) version of the Omega test was supposed to have been implemented already, but it missed some cases. Those cases are now also handled. For example, the set description

{ [m, w] : exists a : w - 2m - 5 <= 3a <= m - 2w }is now simplified to{ [m, w] : w <= 1 + m }. -

Improve coalescing

In particular, the following set descriptions are now also coalesced

{ [a, b] : 0 <= a <= 2 and b >= 0 and ((0 < b <= 13) or (2*floor((a + b)/2) >= -5 + a + 2b)) } { [a] : (2 <= a <= 5) or (a mod 2 = 1 and 1 <= a <= 5) } { [y, x] : (x - y) mod 3 = 2 and 2 <= y <= 200 and 0 <= x <= 2; [1, 0] } { [x, y] : (x - y) mod 3 = 2 and 2 <= y <= 200 and 0 <= x <= 2; [0, 1] } { [1, y] : -1 <= y <= 1; [x, -x] : 0 <= x <= 1 } { [1, y] : 0 <= y <= 1; [x, -x] : 0 <= x <= 1 } { [x, y] : 0 <= x <= 10 and x - 4*floor(x/4) <= 1 and y <= 0; [x, y] : 0 <= x <= 10 and x - 4*floor(x/4) > 1 and y <= 0; [x, y] : 0 <= x <= 10 and x - 5*floor(x/5) <= 1 and 0 < y; [x, y] : 0 <= x <= 10 and x - 5*floor(x/5) > 1 and 0 < y } { [x, 0] : 0 <= x <= 10 and x mod 2 = 0; [x, 0] : 0 <= x <= 10 and x mod 2 = 1; [x, y] : 0 <= x <= 10 and 1 <= y <= 10 } -

Improve parametric integer programming

Based on analysis of a test case reported by Wenlei Bao, a major cause of the long run-time turned out to be the symmetry detection performed internally. The detected symmetry is exploited by replacing several upper bounds with a single additional parameter. This helps to speed up lexicographic optimization of some inputs with lots of symmetry, but causes a significant slow-down on this particular input because the parameters that appear in the replaced constraints may also appear in other constraints and through the replacement, the connection is lost. The symmetry detection is now only applied in cases where this problem does not occur.

A further analysis showed that quite some time was spent in exploring parts of the domain where the optimum is undefined. In a pure lexicographic optimization operation, the user is not interested in this part of the domain. The procedure was changed to start from the actual domain of the input rather than the corresponding universe such that the complement no longer needs to be explored at all. A possible down-side is that this actual domain may involve existentially quantified variables, which increases the dimension of the context and which may also appear in the final result. Special care therefore needs to be taking for the case where lexicographic optimization is being used for its side-effect of eliminating existentially quantified variables.

The upshot is that for the input

{ S_2[i, k, j] -> [o0, o1, o2, o3] : exists (e0 = floor((o1)/8): 8e0 = o1 and k <= 255 and o0 <= 255 and o2 <= 255 and o3 <= 255 - j and 7o3 >= 255 - j and 7o3 <= 7 - i and 240o3 <= 239 + o0 and 247o3 <= 247 + k - j and 247o3 <= 247 + k - o1 and 247o3 <= 247 + i and 248o3 >= 248 - o1 and 248o3 <= o2 and 254o3 >= i - o0 + o1 and 254o3 >= -o0 + o1 and 255o3 >= -i + o0 - o1 and 1792o3 >= -63736 + 257o1) }the computation time went down from

real 6m36.786s user 6m36.032s sys 0m0.128sto

real 0m0.040s user 0m0.032s sys 0m0.000s -

preserve

isolateoption inisl_schedule_node_band_splitThis is needed to simplify the implementation of full/partial tile separation for hybrid tiling in PPCG.

-

Print AST nodes in YAML format

-

Minor improvements to Python bindings

-

Use PPCG specific macro names in generated code

The names for macros inside isl AST expression now have a

ppcg_prefix to avoid conflicts with other symbols. -

Complete transition to schedule trees

In particular, the last remaining use of nested AST generation has been removed. AST expressions for affine expressions are now also built within the context where they will appear, allowing them to exploit this context for simplification purposes.

-

Maximize coincidence by default

In particular, turn on

--isl-schedule-maximize-coincidenceby default. -

Map arrays with constant index expressions to private memory

This allows some code to be mapped to an accelerator that could not be mapped before because of excessive scheduling constraints.

-

Optionally group chains of statements

Statements that are executed in sequence in the input code and where the second completely depends on the first can now be grouped together. In particular, pairs of consecutive statements where the first writes to a scalar and the second reads from that scalar are grouped together. This reduces the number of statements, reducing the computation time of the scheduler, while not giving up too much scheduling freedom.

-

Push affine conditions into index expressions

If the code contains an expression of the form

c ? A[f] : A[g]with c an affine condition, then replace it by

A[c ? f : g]This reduces the number of accesses and, more importantly, includes the information of when the access is performed in the index expression. This allows dependence analysis to take into account this information, reducing the risk of introducing spurious dependences.

-

Properly support undeclared loop iterators

When the loop iterator of a

forloop is not declared inside theforloop itself, the value of this loop iterator persists after the execution of the loop and could in principle be used by subsequent statements. Introduce assignments to the corresponding variable that ensure the variable contains the correct value after the execution of the loop. -

Optionally combine SCCs incrementally in the scheduler

Within each component of the dependence graph, the isl scheduler tries to schedule all statements together by default. By turning off the

schedule-whole-componentoption, the isl scheduler will first consider each strongly connected component separately and then incrementally combine them. This should reduce the scheduling time and allows for more finegrained control over which SCCs should be combined. In particular, the coincidence maximization below depends on the incremental scheduler. -

Optionally maximize coincidence in the scheduler

If the

schedule-whole-componentoption is turned off, then turning on theschedule-maximize-coincidenceoption will make isl refuse to combine SCCs if this reduces the number of coincident band members in the schedules for any of the SCCs. -

Optionally avoid loop coalescing in the scheduler

By default, isl will now try and prevent or recover from schedules that represent loop coalescing. Loop coalescing is undesirable because it results in a skewed view of the schedule dimension, leading to single instance band members, and because the large coefficients can cause difficulties in later step, including complicated generated code.

For example, a schedule of the form

domain: "{ C[i0, i1] : 2 <= i0 <= 3999 and 0 <= i1 < i0 }" child: schedule: "[{ C[i0, i1] -> [(3998i0 + i1)] }]" child: schedule: "[{ C[i0, i1] -> [(i0)] }]" permutable: 1 coincident: [ 1 ]will be rejected in favor of the schedule

domain: "{ C[i0, i1] : 2 <= i0 <= 3999 and 0 <= i1 < i0 }" child: schedule: "[{ C[i0, i1] -> [(i0)] }]" child: schedule: "[{ C[i0, i1] -> [(i1)] }]" -

Fix handling of nested integer divisions

Nested integer divisions were not handled correctly in some operations, leading to incorrectly generated code in corner cases.

-

Optionally detect min/max expressions during AST generation

An

isl_pw_affwith several pieces often represents a minimum of maximum expression. Detect some of these cases insideisl_ast_build_expr_from_pw_affand construct an explicit minimum or maximum expression on success.For example, construct

max(0, M)instead ofM <= 0 ? 0 : M. -

Minor AST generator improvements

In particular, reduce the number of pieces during the combination of pairs of piecewise affine expressions and move the most complicated piece last such that it does not need to be AST generated. When combining a pair of expressions of m and n pieces, the original implementation could produce a result with 2 * (m + 1) * (n + 1) pieces. The new implementation creates a result with at most m + n pieces.

-

Simplify stride constraints

isl_map_gistwould only simplify away stride constraints if they could be removed completely and if they happened to be formulated in exactly the same way in the context. Stride constraints are now simplified more generally. For example,[n] -> { [x] : x = n && x mod 32 = 0 } % [n] -> { [x] : x mod 32 = 0 }now produces

[n] -> { [x = n] }, and{ [x] : x mod 6 = 0 } % { [x] : x mod 3 = 0 }now produces

{ [x] : x mod 2 = 0 }. -

Improve support for expansions in schedule trees

These changes are needed to support statement grouping in PPCG.

-

Fix live-out computation

Due to a bug, the live-out computation would consider all elements written by a write to have been killed as soon as one of those elements had effectively been killed. This could in rare cases result in some statement instances mistakenly getting removed during dead code elimination.

-

Optionally compute schedule for C target

The original support for the C target only added the basic infrastructure and did not even allow the input code to be rescheduled. Now, this backend will also (optionally) reschedule the code, but support for this target is still fairly basic.

-

Create single kernel for non-permutable subtree

If some parts of the code that is mapped to the device do not have any parallelism, then a kernel would be launched for each instance of that part of the code, even if that would result in loop on the host that only launches such kernels. Now, a single kernel is invoked for the entire non-parallel part.

-

Support more recent versions of NTL

In particular, assume NTL has been compiled in ISO mode, which has been the default ever since NTL 5.4, and handle a change in internal representation of NTL.

-

Support more operations in iscc

These additional operations are needed for some of the examples in the Presburger Formulas and Polyhedral Compilation tutorial.

-

Initialize compiler builtins

Michael Kruse contributed a patch to initialize builtins. Without this initialization, builtins are treated as implicitly defined functions and are therefore assumed to have return type

int, resulting in compiler warnings or even errors when those builtins are used. -

Rename

pet_scopaccessorsThe original names where too focused on how the accessors were implemented.

-

Support variable renaming

This allows the input to have several locally declared variables with the same name.

-

Support inlining of outermost call expressions

-

Add preliminary Python bindings

The python bindings are needed for some of the examples in the Presburger Formulas and Polyhedral Compilation tutorial.

-

Add 32 bit integer optimization for IMath

Until isl 0.15 the IMath backend, which serves as MIT licensed alternative to the default libgmp backend, was not very well optimized and its result could result in isl being 2-4x slower. Michael Kruse contributed a new small-integer-optimization to isl which improved the performance of the IMath backend to be now around 30% faster than libgmp.

-

Improved AST generation quality

Various smaller changes result in generally improved AST generation quality.

-

Add

isl_union_flow_get_full_may_dependenceandisl_union_flow_get_full_must_dependenceThese functions include the accessed data elements that are responsible for the dependence in their results.

-

Improvements to Python bindings

The isl provided python bindings have been extended to support the full set of polyhedral compilation examples that is discussed in our new Presburger Formulas and Polyhedral Compilation tutorial.

-

Improved printing of isl sets and maps

Until isl 0.15 the constraints in an isl set and map have been printed in the (rather random) order they have been added to the data structure. isl now makes an effort to print constraints in a human readable way. This results in both shorter constraints, but also more predictable output in case implementation details change.

Before:

{ S[i0, i1] : i0 <= 97 and i0 >= 0 and i1 <= 99 and i1 >= 1 }After:

{ S[i0, i1] : 0 <= i0 <= 97 and 0 < i1 <= 99 }

October 07, 2022

IMPACT 2023 Call for papers and presentations

This is a CALL FOR PAPERS for

IMPACT 2023

13th International Workshop on Polyhedral Compilation Techniques: impact-workshop.org

held in conjunction with HiPEAC 2023 in Toulouse, France.

Important Dates

| Submission: | November 24, 2022 (AoE) |

| Author notification: | December 19, 2022 |

| Final version due: | January 12, 2023 (AoE) |

| Workshop: | January 16, 2023 |

For this edition we accept two kinds of submissions: paper submissions and presentation-only submissions.

Overview

Polyhedral compilation techniques receive more attention than ever from both researchers and practitioners. Thanks to a unified formalism for parallelization and memory optimization, polyhedral techniques play a central role in a wide range of domain-specific languages and optimizing compilers, with high impact in competitive areas such as machine learning, scientific computing and media processing.

IMPACT is a unique event focusing on polyhedral compilation. The workshop brings together researchers and practitioners for a high-quality one-day event including a keynote, paper presentations, and work-in-progress discussions. The workshop has a well established tradition of high quality, extensive peer reviews, with a PC balancing core polyhedral expertise with applications and broader computing systems research.

We welcome both theoretical and experimental papers and presentations on all aspects of polyhedral compilation. We also welcome submissions describing preliminary results, crazy new ideas, position papers, experience reports, education material, and available tools, with an aim to stimulate discussions, collaborations, and advances in the field. The following illustrate potential IMPACT papers:

Topics of interest include, but are not limited to:

Paper Submission

Paper submissions should not exceed 10 pages (recommended 8 pages), excluding references, formatted as per ACM SIGPLAN proceedings format. Short paper submissions, even with only 2 pages, are welcome as well.

Use version 1.54 or above of the following templates to prepare your manuscript:

https://www.acm.org/publications/proceedings-template

Make sure to use the “sigplan” subformat. Visit

http://sigplan.org/Resources/Author/

for further information on SIGPLAN manuscript formatting. NOTE: older versions of the article template use smaller fonts for submission, please double-check that you are using the recent style file (in particular, various LaTeX distributions ship older versions of the acmart style file, please download the most recent one from ACM).

Submissions should use PDF format and be printable on US Letter or A4 paper. Please submit your manuscripts through EasyChair and while selecting “Paper” as submission type:

https://easychair.org/conferences/?conf=impact2023

Proceedings will be posted online. If the final version of an accepted paper does not sufficiently address the comments of the reviewers, then it may be accompanied by a note from the program committee. Publication at IMPACT will not prevent later publication in conferences or journals of the presented work. However, simultaneous submission to IMPACT and other workshop, conference, or journal is often prohibited by the policy of other venues. For instance, a manuscript overlapping significantly with IMPACT submission cannot be submitted to PLDI 2023 or any other overlapping SIGPLAN event.

Please make sure that at least one of the authors can attend the workshop if your work is accepted to give a 25 minutes presentation of your work.

Presentation-only Submission

Submit a summary of your presentation through EasyChair:

https://easychair.org/conferences/?conf=impact2023

Submissions have no formatting requirements but should use PDF formal. Choose “Presentation-only” when submitting.

The presentation should take no longer than 25 minutes to present. Please make sure that at least one of the authors can attend the workshop if your work is accepted.

Committees

Organizers and Program Chairs

| Michael Kruse | (Argonne National Laboratory, USA) |

| Ramakrishna Upadrasta | (IIT Hyderabad, India) |

Contact: mkruse@anl.gov, ramakrishna@cse.iith.ac.in

Program Committee

| Lorenzo Chelini | (Intel, Switzerland) |

| Albert Cohen | (Google, France) |

| Alexandre Eichenberger | (IBM, USA) |

| Renato Golin | (Intel, UK) |

| Tobias Grosser | (University of Edinburgh, UK) |

| Vinod Grover | (NVIDIA, USA) |

| Guillaume Iooss | (INRIA, France) |

| Andreas Kloeckner | (UIUC, USA) |

| Martin Kong | (Ohio State University, USA) |

| Antoine Miné | (Sorbonne Université, France) |

| Diana Picus | (Linaro, Sweden) |

| Arjun Pitchanathan | (University of Edinburgh, UK) |

| Sanjay Rajopadhye | (Colorado State University, USA) |

| Daniele Giuseppe Spampinato | (Huawei, Switzerland) |

| Sven Verdoolaege | (Cerebras, Belgium) |

| Dave Wonnacott | (Haverford, USA) |

| Jie Zhao | (State Key Laboratory Zhengzhou, China) |

August 23, 2021

Final Report for Google Summer of Code 2021

The GSoC 2021 coding phase officially ended on August 16. We congratulate Riccardo Mori for his participation on the project “Use official isl C++ bindings for Polly”!

We thank Riccardo for all his contributions and hope that we could get you interested in developing for LLVM, Clang, Polly and/or isl and maybe that you continue contributing to these projects.

[GSoC Logo source: https://developers.google.com/open-source/gsoc/resources/media]

September 19, 2018

LLVM Social - Compilers meet Theorem Provers

We had an outstanding social event on September 13 themed Compilers meet Theorem Provers, with more than 45 attendees and three outstanding speakers.

August 15, 2018

Final Reports for Google Summer of Code 2018

The GSoC 2018 coding phase officially ended yesterday. We congratulate all students who particpiated!

Google requires all students to preparte a web page summarizing the work they did for their project. Here are the reports for the projects supported by Polly Labs:

We thank all students for their contributions and all mentors for their support. We hope that we could get you interested in developing for LLVM, Clang, Polly and/or isl and maybe that you continue contributing to these projects.

[GSoC Logo source: https://developers.google.com/open-source/gsoc/resources/media]

March 10, 2018

PPCG 0.08 has been released

PPCG 0.08 was released containing minor fixes.

March 10, 2018

barvinok 0.41 has been released

barvinok 0.41 was released containing only a single minor change:

March 07, 2018

pet 0.11 has been released

pet 0.11 was released containing a couple of changes:

March 03, 2018

isl 0.19 has been released

isl 0.19 was released containing various improvements:

February 12, 2018

Polly Labs accepted for Google Summer of Code 2018

We are happy to announce that Google selected Polly Labs as one of the mentoring organizations for Summer of Code 2018. Our page at Google as participating organization is here. Like 2017 we will assist students to enter open source development for polyhedral optimization and compilation.

If you are currently enrolled at a university or college and you are interested in contributing in the field of polyhedral compilation, we invite you to apply with a project proposal at Google. The first step is to contact us on one of our projects’ mailing list. See the 2018 ideas and information page for more detailed instructions and ideas for projects. Hints for writing a good proposal are found on our How-to-apply page.

Also see the post for GSoC 2017.

[GSoC Logo source: https://developers.google.com/open-source/gsoc/resources/media]

January 23, 2018

Extending Pluto-style Polyhedral Scheduling with Consecutivity presentation at IMPACT 2018

Sven Verdoolaege presented the Extending Pluto-style Polyhedral Scheduling with Consecutivity paper at IMPACT 2018.

Abstract:

The Pluto scheduler is a successful polyhedral scheduler that is used in one form or another in several research and production compilers. The core scheduler is focused on parallelism and temporal locality and does not directly target spatial locality. Such spatial locality is known to bring performance benefits and has been considered in various forms outside and inside polyhedral compilation. For example, the Pluto compiler has some support for spatial locality, but it is limited to a post-processing step involving only loop interchange. Consecutivity is a special case of spatial locality that aims for stride-1 accesses, which can be useful for constructing burst accesses and for vectorization. Stride-1 accesses have been targeted by an approach based on one-shot scheduling, but it is fairly approximative and not directly transferable to a Pluto-style scheduler. This paper describes an approach for consecutivity that is integrated in a Pluto-style polyhedral scheduler, as implemented in isl. Both intra-statement and inter-statement consecutivity is considered, taking into account multiple references per statement and the integration into a component based incremental scheduler.

November 10, 2017

Consecutivity in the isl Polyhedral Scheduler report made available

The report Consecutivity in the isl Polyhedral Scheduler, describing an extension of the isl scheduler for targeting consecutive accesses, has been made available.

August 29, 2017

Final Reports for Google Summer of Code 2017

The GSoC 2017 coding phase officially ended today. We congratulate all students who participated!

Students prepared a page for their final report summarizing the work they did for their project. These are the reports for the students that were supported by Polly Labs:

We thank all students for their contributions and all mentors for their support. We hope that we could get you interested in developing for LLVM, Clang and/or Polly and maybe that you continue contributing to these projects.

[GSoC Logo source: https://developers.google.com/open-source/gsoc/resources/media]

June 23, 2017

Scheduling for PPCG report made available

The report Scheduling for PPCG, describing the isl scheduler as used by PPCG, has been made available.

February 27, 2017

Polly Labs accepted for Google Summer of Code 2017

NEWS: Google selected Polly

Labs as mentoring organization for

Google Summer of Code 2017! You are an undergraduate student or doing your

doctorate? Join us to work on polyhedral compilation this summer! Here the link

directly to our Polly Labs GSoC Ideas and Information

Page.

Polyhedral compilation is so much more than just the LLVM Polly project and with Polly Labs as a Google Summer of Code organization we now have a new tool that allows us to host Summer of Code students to work on polyhedral modeling projects that go even beyond the scope of LLVM and gcc. LLVM and gcc have been and are in the future great places to host core compiler projects that use polyhedral compilation, but our community is interested in a lot more. Work on the PPCG polyhedral GPU code generator, the isl math library, as well the PRL run-time library, but also many other research projects (Apollo, Polly-JIT, …) benefits the greater polyhedral community, but is certainly outside of the scope of our traditional host organizations. The Polly Labs GSoC effort provides a home for such kind of projects and complements the traditional GSoC work we do in the context of gcc, LLVM, or Julia.

Google Summer of Code has a long history in supporting polyhedral compilation. Polly itself started as a GSoC project and already Polly’s older sibling, GCC/Graphite, took part in the very first GSoC years, with Tobias Grosser (today Polly Labs and ETH Zurich) being the first student in 2008 (mentored by Sebastian Pop). Only one year later, Hongbin Zheng (today lead of Xilinx High-Level Synthesis Team) worked as GSoC student on the first implementation of the Polly frontend and put together with Tobias Grosser the first end-to-end version of Polly. Over the years, many GSoC students contributed directly or indirectly to advances in polyhedral compilation or infrastructure we build on today. LLVM’s original PTX backend was developed as part of Google Summer of Code 2011 by Justin Holewinski (today NVIDIA), and today drives the GPU generation of Polly-ACC, for which again foundations were laid during GSoC 2012 and 2014 by Yabin Hu. Over the years, many students worked on Google Summer of Code projects and contributed significant pieces to polyhedral compilation in general and Polly specifically and we hope that you might be the next one. Riyadh Baghdadi (now MIT, before ENS Paris), another former GSoC student working on GCC/graphite, wrote down some great advices on “how to write a great GSoC proposal”. Check them out to follow in the footsteps of many successful GSoC students.

We already have an interesting set of polyhedral experts, who kindly agreed to be mentors in projects of their expertise and we are hopeful to convince more. As a student, we invite you to apply with your own project ideas or to expand one of the ideas we suggested on our Google Summer of Code page. This pages also contains information about possible mentors, how to apply as a student, and generally how to get started with the Google Summer of Code process. We are looking forward to work with you on exciting Google Summer of Code projects!

[GSoC Logo source: https://developers.google.com/open-source/gsoc/resources/media]

February 24, 2017

barvinok 0.40 has been released

barvinok 0.40 was released mainly fixing some minor bugs. It will also serve as a basis for more invasive changes in the next release.

February 09, 2017

PPCG 0.07 has been released

PPCG 0.07 was released containing one main change:

January 18, 2017

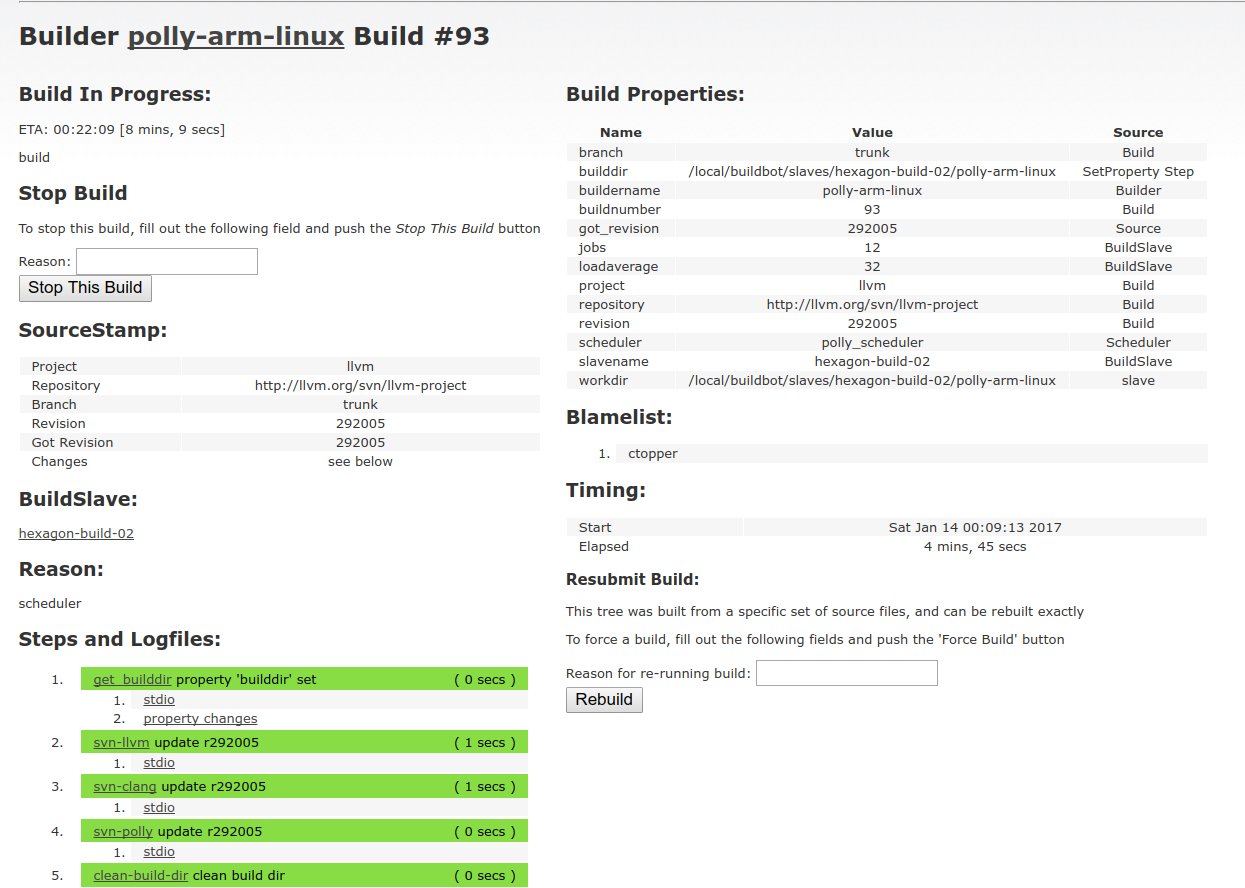

New Polly ARM64 buildbot hosted by Qualcomm

Polly gained a new ARM64 Buildbot sponsored by Qualcomm Innovation Center, which adds ARM64 platform coverage to Polly’s continious integration testing:

With this buildbot Polly increases its buildbot farm to nine builders. Polly runs the following builders:

Besides the standard -O3 -mllvm -polly configuration, Polly regularly tests its ability to generate parallel code, and also runs in an “extended coverage mode” called -mllvm -polly-unprofitable which forces Polly to transform each trivial loop it encounteres to provide higher test coverage on Polly’s transformation engine by transforming even code where we do not expect any performance gains. Finally, with our Polly Performance bot, we regularly report performance results to the LLVM performance tracking infrastructure hosted at http://llvm.org/perf/.

Besides the above set of buildbots, we also host a set of “Before-Vectorizer” build bots, which keep track of our efforts to move Polly into the LLVM pass pipeline with the goal to remove the need for additional canonicalization passes when running Polly. Such passes always pre-transform the IR that is fed to the LLVM standard pass pipeline and can consequently result in random difficult to avoid performance changes. After moving Polly into the Pass pipeline, we expect Polly to only affect performance in cases where our performance model predicts a clear benefit. You can find more information about this topic in the Polly Documentation.

Polly is also regularly tested externally. Publicly accessible are the FFmpeg FATE tests. More tests are run in-house by different users.

December 21, 2016

pet 0.10 has been released

pet 0.10 was released containing a couple of changes:

December 20, 2016

isl 0.18 has been released

isl 0.18 was released containing various improvements:

August 30, 2016

IMPACT 2017 is colocated with HiPEAC 2017 in Stockholm

Abstract

IMPACT 2017, the 7th International Workshop on Polyhedral Compilation, will be taking place January 23 - 25, 2017 in Stockholm colocated with the HiPEAC conference. IMPACT is probably the venue to meet the full polyhedral compilation community and a great place to discuss your latest ideas on polyhedral compilation.

With Tobias as co-chair, Polly Labs contributes to the organization of IMPACT 2017.

The following is the official call for papers:

This is a CALL FOR PAPERS for

IMPACT 2017

7th International Workshop on

Polyhedral Compilation Techniques

http://impact.gforge.inria.fr/impact2017/

held in conjunction with HiPEAC 2017 (Jan 23-25, 2017)

Stockholm, Sweden.

======================================

IMPORTANT DATES:

Abstract submission: October 21, 2016 (AoE)

Paper submission: October 28, 2016 (AoE)

Author notification: December 02, 2016

Final version due: December 16, 2016

Workshop date: TBD

======================================

OVERVIEW:

Polyhedral techniques have gained attention due to the rise of

multi-core processors and other architectures that require parallelism

and more complex schedules for data locality. At the heart of the

polyhedral model is an abstraction of programs that enables powerful

analysis and scheduling of applications. Recent developments in the

polyhedral model research area includes automatic parallelization

targeting various platforms, program verification, and hardware

synthesis. IMPACT is a unique workshop aimed to bring together

researchers and practitioners interested in polyhedral techniques to

exchange ideas. This year's IMPACT will be held in conjunction with

HiPEAC as a one-day workshop including technical paper presentations,

panel discussions, and possibly a keynote.

We welcome both theoretical and experimental papers on all aspects of

polyhedral compilation and optimization. We also welcome submissions

describing preliminary results, crazy new ideas, position papers,

experience reports, and available tools, with an aim to stimulate

discussions, collaborations, and advances in the field. The following

illustrate potential IMPACT papers:

- Discussion of a preliminary idea with an attempt to place

it in context but no experimental results.

- Experimental results comparing two or more existing ideas.

- Presentation of an existing idea in a different way including

illustrations of how the idea applies in current codes.

Attribution should be done as well as possible.

Topics of interest include, but are not limited to:

- program optimization (automatic parallelization, tiling, etc.)

- code generation

- data/communication management on GPUs, accelerators and

distributed systems

- hardware/high-level synthesis

- static analysis

- program verification

- model checking

- theoretical foundations of the polyhedral model

- extensions of the polyhedral model

- scalability and robustness of polyhedral compilation techniques

- autotuning

- application case studies

- tool demonstration

SUBMISSION:

Submissions should not exceed 8 pages (recommended 6 pages), excluding

references, formatted as per ACM proceedings format. Please use the

following template when preparing your manuscript:

http://www.acm.org/sigs/publications/proceedings-templates

Submissions should be in PDF format and printable on US Letter or A4

sized paper. Please submit through EasyChair at:

https://easychair.org/conferences/?conf=impact17

Proceedings will be posted online. If the final version of an accepted

paper does not sufficiently address the comments of the reviewers, then

it may be accompanied by a note from the program committee. Publication

at IMPACT will not prevent later publication in conferences or journals

of the presented work. However, simultaneous submission to IMPACT and

other workshop, conference, or journal is often prohibited by the policy

of other venues. For instance, a paper with significant overlap with

IMPACT submission cannot be sent to PLDI 2017 or any other overlapping

SIGPLAN event.

We will also continue the poster teasers we started last year. Authors

of the rejected papers that still plan to attend HiPEAC will have an

opportunity to present their submission in the HiPEAC poster session. We

encourage poster presentations by providing a short (3 min.) slot in the

workshop to advertise the posters. If possible, posters related to

IMPACT will be gathered at the poster session close to each other.

COMMITTEES:

Organizers and Program Chairs:

Mary Hall, University of Utah, USA

Tobias Grosser, ETH Zurich, Switzerland

Program Committee:

Uday Bondhugula (IISc Bangalore, India)

Cedric Bastoul (University of Strasbourg, France)

Samuel Bayliss (Xilinx, USA)

Albert Cohen (INRIA, France)

Philippe Clauss (University of Strasbourg, France)

Alain Darte (CNRS, France)

Paul Feautrier (ENS Lyon / INRIA, France)

Armin Groesslinger (University of Passau, Germany)

Alexandra Jimborean (Uppsala University, Sweden)

Paul Kelly (Imperial College London, UK)

Andreas Kloeckner (University of Illinois at Urbana-Champaign, USA)

Martin Kong (Rice University, USA)

Sanyam Mehta (Cray Inc., USA)

J. Ramanujam (Louisiana State University, USA)

P. Sadayappan (Ohio State University, USA)

Jun Shirako (Rice University, USA)

Michelle Strout (University of Arizona, USA)

Ramakrishna Upadrasta (IIT Hyderabad, USA)

Sven Verdoolaege (Polly Labs, Belgium)

David Wonnacott (Haverford College, USA)

Tomofumi Yuki (INRIA, France)

Hongbin Zheng (Xilinx Inc., USA)

Reply to AllReply to SenderForward

July 11, 2016

AST generation paper presented at PLDI 2016

Tobias Grosser presented Polyhedral AST generation is more than scanning polyhedra at PLDI 2016, which was held this year on June 13-17 on the beautiful shores of Santa Barbara.

In the ACM TOPLAS paper presented, we provide detailed insights into state-of-the-art polyhedral AST generation techniques used at the heart of Polly, GCC/Graphite, PPCG, PolyMage, as well as others, and discuss concepts – outside of classical AST generation – used to enable GPU tiling techniques such as hybrid-hexagonal tiling.

Abstract

Abstract mathematical representations such as integer polyhedra have shown to be useful to precisely analyze computational kernels and to express complex loop transformations. Such transformations rely on Abstract Syntax Tree (AST) generators to convert the mathematical representation back to an imperative program. Such generic AST generators avoid the need to resort to transformation-specific code generators, which may be very costly or technically difficult to develop as transformations become more complex. Existing AST generators have proven their effectiveness, but they hit limitations in more complex scenarios. Specifically, (1) they do not support or may fail to generate control flow for complex transformations using piecewise schedules or mappings involving modulo arithmetic; (2) they offer limited support for the specialization of the generated code exposing compact, straightline, vectorizable kernels with high arithmetic intensity necessary to exploit the peak performance of modern hardware; (3) they offer no support for memory layout transformations; (4) they provide insufficient control over the AST generation strategy, preventing their application to complex domain-specific optimizations.

We present a new AST generation approach that extends classical polyhedral scanning to the full generality of Presburger arithmetic, including existentially quantified variables and piecewise schedules, and introduce new optimizations for the detection of components and shifted strides. Not limiting ourselves to control flow generation, we expose functionality to generate AST expressions from arbitrary piecewise quasi-affine expressions which enables the use of our AST generator for data-layout transformations. We complement this with support for specialization by polyhedral unrolling, user-directed versioning, and specialization of AST expressions according to the location they are generated at, and complete this work with fine-grained user control over the AST generation strategies used. Using this generalized idea of AST generation, we present how to implement complex domain-specific transformations without the need to write specialized code generators, but instead relying on a generic AST generator parametrized to a specific problem domain.

Video

Slides

Online Playground

Try it out in the Polly Labs playground.

May 11, 2016

Two presentations at Spring School on Polyhedral Code Optimizations and Numerical Simulation

Sven Verdoolaege presented the lecture PPCG and Pencil compiler design at the Polyhedral Code Optimizations and Numerical Simulation spring school.

Abstract:

This course presents an overview of the inner workings of the polyhedral parallelizing compiler PPCG, which takes PENCIL code as input and produces either CUDA or OpenCL code. PENCIL is a subset of C99 with some extra builtins and pragmas that allow the user to express additional information that can be used by PPCG to produce better code. Some of the most prominent PENCIL features are described, along with their effect on PPCG. All the major steps of PPCG are covered, with a special focus on the choice of the core scheduling algorithm and its potential pitfalls.

Michael Kruse presented Using PPCG in Practice by Means of the pencilcc Wrapper

Abstract:

pencilcc is a compiler for PENCIL source code (similar to nvcc or mpicc) that uses PPCG under the hood, but that simplifies the user experience. PENCIL is a subset of C99 designed to facilitate automatic parallelization. The pencilcc compiler also comes with a pencil.h header and a runtime library called PRL. In this session we will learn how to compile PENCIL sources using pencilcc to OpenCL/CUDA and how to profile our code. We will also see the influence of PENCIL-specific annotations on the generated code as well as the positive effect on performance of using the PRL library.

May 07, 2016

PPCG 0.06 has been released

PPCG 0.06 was released containing various changes:

May 05, 2016

pet 0.09 has been released

pet 0.09 was released containing various changes:

May 04, 2016

isl 0.17 has been released

isl 0.17 was released containing various improvements:

isl 0.17.1 bug fix release

March 29, 2016

Five presentations at EuroLLVM and CC

At this years Euro LLVM and CC conference in Barcelona, a variety of Polly Labs related presentations have been given.

1. Analysing and Optimizing your Loops with Polly (Tutorial)

The Polly Loop Optimizer is a framework for the analysis and optimization of (possibly imperfectly) nested loops. It provides various transformations such as loop fusion, loop distribution, loop tiling as well as outer loop vectorization. In this tutorial we introduce the audience to the Polly loop optimizer and show how Polly can be used to analyze and improve the performance of their code. We start off with basic questions such as "Did Polly understand my loops?", "What information did Polly gather?", "How does the optimized loop nest look like?", "Can I provide more information to enable better optimizations?", and "How can I utilize Polly's analysis for other purposes?". Starting from these foundations we continue with a deeper look in more advanced uses of Polly: This includes the analysis and optimization of some larger benchmarks, the programming interfaces to Polly as well as the connection between Polly and other LLVM-IR passes. At the end of this tutorial we expect the audience to not only be able to optimize their codes with Polly, but also to have a first understanding of how to use it as a framework to implement their own loop transformations.

Presenters: Tobias Grosser and Johannes Doerfert

Feedback:

Tobias Grosser (the lead developer of Polly) and Johannes Doerfert gave a fantastic tutorial on Polly. ‘Polyhedral loop modelling’ is an intimidating term when you first encounter it, but their explanation was clear and effective. They also showed how to derive simple loop optimisations like loop interchange in this system and the more sophisticated optimisations they’ve developed on top.

http://www.wilfred.me.uk/blog/2016/03/22/llvm-developer-meeting-2016/

2. Polly - Loop Optimization Infrastructure (Birth of a Feather)

The Polly Loop Optimization infrastructure has seen active development throughout 2015 with contributions from a larger group of developers located at various places around the globe. With three successful Polly sessions at the US developers meeting and larger interest at the recent HiPEAC conference in Prag, we expect various Polly developers to be able to attend EuroLLVM. To facilitate in-persona collaboration between the core developers and to reach out to the wider loop optimization community, we propose a BoF session on Polly and the LLVM loop optimization infrastructure. Current hot topics are the usability of Polly in an '-O3' compiler pass sequence, profile driven optimizations as well as the definition of future development milestones. The Polly developers community will present ideas on these topics, but very much invites input from interested attendees.

Presenters: Tobias Grosser, Johannes Doerfert, Zino Benaissa

3. Molly: Parallelizing for Distributed Memory using LLVM (Presentation)

Motivated by modern day physics which in addition to experiments also tries to verify and deduce laws of nature by simulating the state-of-the-art physical models using large computers, we explore means of accelerating such simulations by improving the simulation programs they run. The primary focus is Lattice Quantum Chromodynamics (QCD), a branch of quantum field theory, running on IBM newest supercomputer, the Blue Gene/Q. Molly is an LLVM compiler extension, complementary to Polly, which optimizes the distribution of data and work between the nodes of a cluster machine such as Blue Gene/Q. Molly represents arrays using integer polyhedra and uses another already existing compiler extension Polly which represents statements and loops using polyhedra. When Molly knows how data is distributed among the nodes and where statements are executed, it adds code that manages the data flow between the nodes. Molly can also permute the order of data in memory. Molly's main task is to cluster data into sets that are sent to the same target into the same buffer because single transfers involve a massive overhead. We present an algorithm that minimizes the number of transfers for unparametrized loops using anti-chains of data flows. In addition, we implement a heuristic that takes into account how the programmer wrote the code. Asynchronous communication primitives are inserted right after the data is available respectively just before it is used. A runtime library implements these primitives using MPI. Molly manages to distribute any code that is representable in the polyhedral model, but does so best for stencils codes such as Lattice QCD. Compiled using Molly, the Lattice QCD stencil reaches 2.5% of the theoretical peak performance. The performance gap is mostly because all the other optimizations are missing, such as vectorization. Future versions of Molly may also effectively handle non-stencil codes and use make use of all the optimizations that make the manually optimized Lattice QCD stencil fast.

Presenter: Michael Kruse

4. How Polyhedral Modeling enables compilation to Heterogeneous Hardware (Presentation)

Polly, as a polyhedral loop optimizer for LLVM, is not only a sophisticated tool for data locality optimizations, but also has precise information about loop behavior that can be used to automatically generate accelerator code. In this presentation we present a set of new Polly features that have been introduced throughout the last two years (and as part of two GSoC projects) that enable the use of Polly in the context of compilation for heterogeneous systems. As part of this presentation we discuss how we use Polly to derive the precise memory footprints of compute regions for both flat arrays as well as multi-dimensional arrays of parametric size. We then present a new, high-level interface that allows for the automatic remapping of memory access functions to new locations or data-layouts and show how this functionality can be used to target software managed caches. Finally, we present our latest results in terms of automatic PTX/CUDA code generation using Polly as a core component.

Presenter: Tobias Grosser

5. Input Space Splitting for OpenCL (Technical Paper – CC 2016)

The performance of OpenCL programs suffers from memory and control flow divergence. Therefore, OpenCL compilers employ static analyses to identify non-divergent control flow and memory accesses in order to produce faster code. However, divergence is often input-dependent, hence can be observed for some, but not all inputs. In these cases, vectorizing compilers have to generate slow code because divergence can occur at run time. In this paper, we use a polyhedral abstraction to partition the input space of an OpenCL kernel. For each partition, divergence analysis produces more precise results i.e., it can classify more code parts as non-divergent. Consequently, specializing the kernel for the input space partitions allows for generating better SIMD code because of less divergence. We implemented our technique in an OpenCL driver for the AVX instruction set and evaluate it on a range of OpenCL benchmarks. We observe speed ups of up to 9x for irregular kernels over a state-of-the-art vectorizing OpenCL driver.

Presenter: Simon Moll

Authors: Simon Moll, Johannes Doerfert, Sebastian Hack

March 07, 2016

Spring School on Polyhedral Code Optimizations and Numerical Simulation announced

A spring school on Polyhedral Code Optimizations and Numerical Simulation was announced. Sven Verdoolaege from Polly Labs will be one of the speakers at this event. More details are available in this blog post .

January 20, 2016

Applied Polyhedral Compilation Tutorial at HiPEAC 2016

Sven Verdoolaege and Tobias Grosser gave today the Applied Polyhedral Compilation tutorial at HiPEAC 2016.

Content:

Polyhedral compilation is widely used in high-level synthesis tools and in production compilers such as gcc, LLVM and IBM/XL and is still being actively developed by several research groups across the globe, resulting in highly attended IMPACT workshops and a recent polyhedral school. It is based on the polyhedral model, a powerful abstraction for analyzing and transforming (parts of) programs that are "sufficiently regular". The key feature of this model is that it is instance based, allowing for a representation and treatment of individual dynamic executions of a statement inside a loop nest and/or individual array elements. In this tutorial, we explain how to apply the basic concepts of polyhedral compilation in different setting. The tutorial consists of two parts, one dealing with the use of polyhedral compilation inside LLVM/Polly, giving a detailed view into the core of Polly and enabling the attendees to write their own Polly based analysis and optimizations, and the other dealing with applications of set cardinality, focusing on problem modeling. In particular, we will address the following topics: - Recapitulation of the basic polyhedral concepts - Overview of LLVM's classical loop optimizers and vectorizers - Identification/analysis of compute kernels, memory access patterns, and data dependences in Polly - Code transformations in Polly - Overview of the basic counting operations - How to model problems involving counting and related operations This tutorial continues from the Polyhedral Compilation without Polyhedra tutorial presented at HiPEAC 2015. Some of the material will be drawn from the first half of a lecture presented at the polyhedral school.

January 19, 2016

Live-Range Reordering presentation at IMPACT 2016

Sven Verdoolaege presented the Live-Range Reordering paper at IMPACT 2016.

Abstract:

False dependences are caused by the reuse of memory to store different data. These false dependences severely constrain the schedule of statement instances, effectively serializing successive accesses to the same memory location. Several array expansion techniques have been proposed to eliminate some or all of these false dependences, enabling more reorderings of statement instances during loop nest transformations. However, array expansion is only relevant when complemented with a storage mapping optimization step, typically taking advantage of the fixed schedule set in earlier phases of the compilation, folding successive values into a compact set of contracted arrays. Furthermore, array expansion can result in memory footprint and locality damages that may not be recoverable through storage mapping optimization when intermediate transformation steps have abused the freedom offered by the removal of false dependences. Array expansion and storage mapping optimization are also complex procedures not found in most compilers, and the latter is moreover performed using suboptimal heuristics (particularly in the multi-array case). Finally, array expansion may not remove all false dependences when considering data-dependent control and access patterns. For all these reasons, it is desirable to explore alternatives to array expansion as a means to avoid the spurious serialization effect of false dependences. This serialization is unnecessary in general, as semantics preservation in presence of memory reuse only requires the absence of interference among live-ranges, an unordered constraint compatible with the their commutation. We present a technique to deal with memory reuse without serializing successive uses of memory, but also without increasing memory requirements or preventing important loop transformations such as loop distribution. The technique is generic, fine-grained (instancewise) and extends two recently proposed, more restrictive approaches. It has been systematically tested in PPCG and shown to be essential to the parallelizing compilation of a variety of loop nests, including large pencil programs with many scalar variables.

January 16, 2016

PPCG 0.05 has been released

PPCG 0.05 was released containing various changes:

January 16, 2016

barvinok 0.39 has been released

barvinok 0.39 was released containing various changes:

January 16, 2016

Presburger Formulas and Polyhedral Compilation v0.02 tutorial released

An initial version of the Presburger Formulas and Polyhedral Compilation tutorial has been made available. The current version focuses mainly on Presburger formulas and on operations that can be performed on sets and binary relations defined by such formulas, but also includes some information on polyhedral compilation, especially on dependence analysis.

January 15, 2016

pet 0.08 has been released

pet 0.08 was released containing various changes:

January 15, 2016

isl 0.16 has been released

isl 0.16 was released containing various improvements:

Together with isl, we also released PPCG 0.05, pet 0.08, and barvinok 0.39.

isl 0.16.1 bug fix release

December 15, 2015

Polly Labs wins HiPEAC Tech Transfer Award

We are proud to announce that Polly Labs won the HiPEAC Tech Transfer Award 2015.

The HiPEAC Tech Transfer Award has been awarded to Polly Labs for the continous development and deployment of the Polly Loop Optimizer and the isl Integer Set Library. Both software artifacts are not only widely used in research, but are today part of the Snapdragon LLVM Compiler for Android shipped by Qualcomm Inc. and are investigated by other Polly Labs industry partners.

This achievement was only possible due to the regular contributions of the growing Polly open source community. We want to thank especially Johannes Doerfert, who was a leading community contributor in 2015 as well as all the other contributers we got over time: Alexandre Isoard, Armin Groesslinger, Chris Jenneisch, Christian Bielert, Dmitry N. Mikushin, Jack Howarth, Karthik Senthil, Ajith Pandel, Lawrence Hu, Matthew Simpson, Peter Conn, Pratik Bhatu, Richard Membarth, Roal Jordans, Sam Novak, Star Tan, Yabin Hu, Adrian Prantl, Andreas Simbuerger, Andy Gibbs, Anton Korobeynikov, Benjamin Kramer, Bill Wendling, Chandler Carruth, Craig Topper, Daniel Dunbar, Daniel Jasper, Zino Benaissa, David Blaikie, David Peixotto, Duncan P. N. Exon Smith, Hongbin Zheng, Logan Chien, Matt Arsenault, Micah Villmow, NAKAMURA Takumi, Patrik Hägglund, Rafael Espindola, Raghesh Aloor, Roman Gareev, Saleem Abdulrasool, Sameer Sahasrabuddhe, Sebastian Pop, Sumanth Gundapaneni, Sunil Srivastava, Sylvestre Ledru, Tanya Lattner, Wei Mi and various others we would have very much deserved to be listed here.

As part of Polly Labs, Albert Cohen, Sven Verdoolage and Michael Kruse from the INRIA Parkas team as well as Torsten Hoefler and Tobias Grosser from the Scalable Parallel Computing Lab at ETH Zurich we are very happy to have received this award and would like to thank again ARM for their great support.